TNT-Audio caught up with noted speaker designer David Smith, ex-JBL, McIntosh, KEF, Snell, and PSB. Join TNT and Speaker Dave on a journey into the heart of speaker design, its history and current trends.

Rahul Athalye (RA): Tell us about how you got started in the audio business.

David Smith (DS): It was a hobby interest when I went to college. Rather than study I would spend hours in the engineering library poring over Audio magazine and old AES journals. My interests probably came from my Dad. He was part of the first Hi Fi hobby boom post World War II. I grew up in a house with homemade Hi Fi: a JBL D130 in a Hartley Boffle (a multi damping layer infinite baffle) with a University tweeter. A Presto turntable with a Japanese copy of the Gray tonearm, a homemade amplifier from the GE transistor manual.

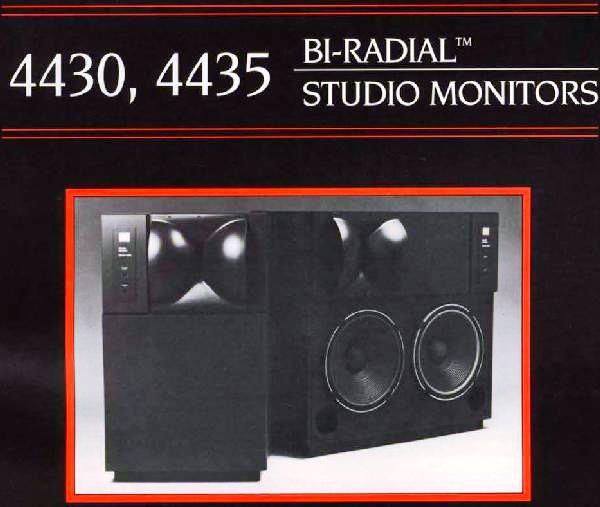

After college I got a job with Essex Cletron in Cleveland, an OEM supplier of loudspeaker drivers. When they moved their engineering location from Cleveland to the Martinsville plant (later to become Harman Motive) I cast around and got a job at JBL in California. There I designed a range of home products and some important studio monitors, including the 4430 and 4435. After that, KEF in the UK, then KEF/Meridian in D.C., then McIntosh in Binghamton, followed by a/d/s/ and Snell in Boston, and most recently PSB in Toronto. In the last few years so much has moved to China that there are very few firms with real engineering or product development in North America. I now work in the digital cinema field for a firm in Toronto.

RA: You have worked for both professional audio (JBL) and hi-fi (KEF) companies. How do the design goals for the pro speakers differ from those for hi-fi speakers?

DS: Pro speakers tend to be more specialized. If a customer needs to fill a 500 seat auditorium with sound, then that’s what they are going to buy, nothing short of that performance will do. Conversely, a domestic audio customer will have more latitude in what he can use and can be swayed a lot by fashion. It becomes more about appealing to their emotions (“I want it” rather than “I need it”). Both pose an interesting challenge. Pro audio generally requires achieving more acoustic output and placing reliability over everything. This may make it feel at times like you are designing Mack Trucks when you would rather be designing a Ferrari. Designing studio monitors was very enjoyable to me as they are nicely in the middle of the spectrum. High output is important but accuracy is also a key ingredient.

In later years, at Snell and even, to a degree at McIntosh, custom installation was driving the market. For high end home theater, people wanted high performance product that could be buried into cabinets or put in-wall. I searched for ways to give that installation flexibility without compromise to performance. If you understand boundary conditions and aspects of achieving directivity (aimable waveguides, for instance) you can achieve high performance even when, at first glance, it looks like the installation aspects will force compromise.

RA: Can we borrow from pro speaker design to achieve better performance in the home? What should be the goals for a hi-fi speaker?

DS: There is a great deal of inherent overlap anyhow. If you look at it in pure engineering terms then it can be distilled down to: Let’s design a speaker. It needs to play X dB loud at X meters (defining power handling, distortion). The audience width and depth will be X (defining dispersion). Etc. Viewed in those terms the product isn’t pro or domestic, it just needs to meet a particular performance spec.

I see a lot of enthusiast interest in people using pro oriented systems at home, especially vintage cinema gear, i.e. large horn systems. I certainly see the appeal but I don’t believe it gives them the best sound possible. There are compromises forced when you need a certain number of acoustic watts to fill up a large space. I’ve designed products with horns and compression drivers (most notably the JBL biradial monitors). I’m proud of the engineering of those products but for myself I would rather have non horn systems at home. I don’t need the extra 15dB of output and would rather have cone and dome units that are inherently smoother in response.

RA: There has been a lot of research with regard to psycho-acoustics and Toole’s book is a great summary of this research. You have not only worked with some of the leading researchers but also contributed to the research itself. What is your take on the findings and how they apply to the home environment?

DS: Toole’s book is a groundbreaking work, worth reading over and over. It summarizes his decades of research on the subject of loudspeakers, their measurements and the applicable psychoacoustics. I think it is the new bible on sound system priorities, covering the audibility of the many measurable aberrations found in loudspeakers, including the effects of the room. If we place a speaker in the typical lively room and do a high resolution measurement, the result is such a messy resonant picture that we can’t possibly discern whether it should sound good or not. Over the years we’ve developed a lot of approaches to smoothing out the view to simplify the picture, but without having any real justification for these approaches.

Toole’s classic paper, published in 2 parts in 1982 (part 1 and part 2), had a carefully run listening panel rank order 20 loudspeakers for preference and quality and at the same time made a large variety of measurements on them. It showed which measurements correlated with listener preference and which didn’t. This is great stuff for a speaker designer because you can ask: “What should I concentrate on when designing speakers?” Everything costs money and it is a competitive market. Should I spend money to get the phase response flat? Should I add extra components to flatten the system impedance curve? How low does distortion need to be? In other words, what measurable parameters correlate with our subjective impression?

This is a fundamental difference between commercially built speakers and DIY efforts. The enthusiast constructor can pick any design aspect and beat it to death. But if you are trying to survive in the marketplace you need to think about “bang for the buck”. Do your cost choices give the end user an audible benefit? Or are you creating straw men to knock down, say, reducing distortion to levels way lower than audible, over a misguided belief that that aspect overrides others?

Well, what I think Toole’s early research shows is that axial frequency response is the number one criterion. Power response, or overall directivity, seems to be a poor correlation with subjective impression. Why this is important is because it shows how room curves (strongly influenced by later arriving off axis response) can be misleading. The larger the room the more power response determines the room curve and the more misleading they will be. Still, I think he has drifted slightly from some of his earlier findings. In spite of what Toole’s early works clearly showed he is now placing importance on room curves and extending that from small rooms to large rooms as well.

I’m currently on one of the SMPTE committees that is looking to replace the X Curve approach to Cinema equalization. For years we’ve known that going into a large space, plunking down a microphone, feeding pink noise into the speakers and adjusting to flat response gave bad results: it is always too bright.

Now what does that mean? Something that measures flat sounds too bright? This isn’t an issue with amplifiers or record/playback systems, where flat sounds flat. I think there are a lot of clues to the reason for this, it is tied into human hearing and our ability to focus on earlier arriving sounds while ignoring later arriving sounds, but this is still a new concept to many in the industry.

With domestic listening rooms, the difference between the anechoic performance and the steady state, in-room performance is fairly minor for upper frequencies. But as the room gets to auditorium or cinema size, the differences enlarge and the steady state curve becomes very misleading.

There are a number of studies, by Kates, Salmi, Lipshitz and Vanderkooy, Bech and others, that suggest that we judge frequency balance with largely a time windowed approach. Late arriving sound is ignored. Also, this time window is long for low frequencies and short for high frequencies. In effect it is the steady state or room response for low frequencies and typically just the direct (anechoic) response for high frequencies. At mid frequencies it might contain the first floor or back wall bounce, but later reflections are generally under the level required for audibility.

Viewing perception this way answers a lot of questions, including why we need to roll off the response of a system in a large room: the early response is inherently brighter than the later response, due to rising speaker directivity, rising room absorption, even the absorption of the air. Flat steady state response would give very bright early sound, and so we reject it.

Why this has always fascinated me as a speaker designer is that it holds out the promise of our being able to design speakers that are perfectly balanced, if only we can figure out how our hearing works. Remember, if we accept as our goal that the speaker shouldn’t add anything, it should be a neutral lens for the recorded sound to come through, we still have to figure out what neutral or flat means? Is it flat anechoic response, flat in-room response, flat power response, flat time windowed response? In smaller domestic listening rooms the direct response from the speaker and the room response (including all reflections and reverberation) aren’t that far apart, perhaps 2-3dB of shelving in the room response when the direct component is flat. As rooms get bigger it becomes a major issue and steady state curves need to roll about ten dB (the Cinema X Curve).

My current approach is to design for flat anechoic response for midrange and up and then see how the low end interacts with the room, do a lot of listening and fine tuning until it seems right across a broad spectrum of software. Still, there is always a feeling that a little more tweaking could give a better result, and a suspicion that I am tuning to give a pleasant result with music I like, rather than achieving verifiable accuracy. Wouldn’t it be nice to have a measuring system that perfectly mimicked human hearing and the way we perceive frequency response? It could take all the subjectivity out of it.

RA: What is directivity? Do we want to control it? And why? (How low do we want to control it? Vertical, horizontal?)

DS: No speaker is perfectly omnidirectional. They all radiate their energy in some particular spatial pattern. This pattern invariably varies with frequency as well. It turns out that some amount of directivity is a good thing as we would like to have some distance between the strength of the direct sound arriving at our ears and the later reflections coming from the various wall surfaces around us.

We need to look at this in 2 levels. General directivity relates to the ratio of energy a speaker radiates on axis to the total energy off axis. Pro products quantify this with measurements of Q or directivity index (d.i.). A common desire in a PA situation is to amplify music or voice to increase its direct level without stirring up the sonic mud of the room’s reverberation. High directivity lets you achieve that. The more detailed view of directivity is the polar pattern of a system. For a given directivity you could have a lot of different polar patterns that lead to that directivity. Each can lead to good or bad frequency response at various radiation angles, even if the average response is very well behaved.
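As a rough illustration of the "general directivity" number described above, here is a minimal sketch that computes a directivity index from an idealized polar pattern. It assumes the pattern is symmetric about the speaker's axis and uses textbook omni, dipole and cardioid shapes; the function name and the three example patterns are illustrative choices, not anything taken from the interview.

```python
import math

def directivity_index(pattern, steps=20000):
    """Directivity index in dB for an axisymmetric pressure pattern.

    pattern(theta) returns relative pressure at angle theta (radians)
    measured from the on-axis direction.
    """
    # Mean squared pressure over the whole sphere:
    # (1/2) * integral from 0 to pi of |p(theta)|^2 * sin(theta) d(theta)
    d_theta = math.pi / steps
    total = 0.0
    for i in range(steps):
        theta = (i + 0.5) * d_theta  # midpoint rule
        total += abs(pattern(theta)) ** 2 * math.sin(theta) * d_theta
    mean_square = 0.5 * total
    # Q is on-axis intensity divided by the spherical average.
    q = abs(pattern(0.0)) ** 2 / mean_square
    return 10.0 * math.log10(q)

# Idealized example patterns (assumptions for illustration only).
def omni(theta):
    return 1.0

def dipole(theta):
    return math.cos(theta)

def cardioid(theta):
    return 0.5 * (1.0 + math.cos(theta))

for name, pattern in (("omni", omni), ("dipole", dipole), ("cardioid", cardioid)):
    print(name, round(directivity_index(pattern), 2), "dB")
```

Note that the ideal dipole and the ideal cardioid come out with the same index even though their polar patterns are quite different, which is the point made above: a single directivity figure says little about the response at any particular angle.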

In the 80’s there was a revolution in horn design that really nailed down the horn geometries that would give constant directivity. Don Keele, who I worked with at JBL, was able to find the link between horn contours and their polar pattern. The results were the modern “constant directivity” horns. Now in a way it is unfortunate that they are called “constant directivity”. Since constant directivity only implies a relationship between axial response and power response, it sells short the actual achievement. The oldest multicell horns had fairly constant directivity but very poor polar uniformity. By this I mean that if you measured their total radiated power it was pretty close to the axial response, but if you moved off axis you saw a lot of variation. Some frequency ranges would get louder when you moved around the driver horizontally and softer when you moved vertically. Overall directivity was decent but particular response at various angles was poor. How the 80’s generation horns really advanced was that they had very constant polar response, a much harder achievement.

The distinction is important for this reason: constant directivity implies an orderly relationship between the device’s axial response and the total radiated power, but doesn’t guarantee good response once you get away from on-axis. On the other hand, a system with constant polars will hold its frequency response well off axis and achieve constant directivity too.

We have to get into psychoacoustics to appreciate the distinction. As mentioned above, the biggest question in my mind facing loudspeaker designers is “what performance parameters should we optimize?” (Conversely, “Are there any parameters we can ignore?”) We all know that frequency response is at the top of the list, but what form of frequency response? We have anechoic response, in-room response, reverberant room response, spherical response, hemispheric response, listening window response. It is always a 3 dimensional problem.

We know that axial system response is more important than any power response. Speakers need flat and smooth axial response to sound good, but they don’t need smooth power response, and flat power response is actually a detriment, making speakers sound too bright.

This leads me to think that directivity, for the sake of achieving a total radiated power of a certain curve, is not a proper goal, but smooth polar curves can allow flat response both on axis and for a range of angles away from on-axis. This can give us good response for a range of listeners or listening positions. As listeners cannot be relied upon to sit exactly on axis to a speaker (or may even have friends that want to listen to the music too!), I think this is the number one goal in speaker design.

That was a long winded way of saying that constant directivity is not important to me but uniformity of off axis response is, a subtle but important distinction.

RA: Line arrays can provide vertical directivity control. Thoughts?

DS: I did a lot of work with line arrays at McIntosh. Their speakers had used that approach for a long time before I got there. I was intrigued because the systems had interesting technical attributes but they weren’t fully understood. I ended up writing some simple programs to model them based on the geometric summing of a lot of vectors (one vector per element). The notion is pretty simple. If you have a row of 5 tweeters, you can pick a frequency and a point in space to listen from and work out the geometry of the line. There is a particular distance of each element to your ear, and based on the frequency the relative time delays would give individual phase shifts. That is where the vector part comes in: air path delay rotates the individual vectors, and vector strength drops with distance from the particular element. Add it all up and you can model the frequency response, polar pattern or whatever, of any conceivable array.
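The vector summing described above can be sketched in a few lines. This is not the original Fortran program, only a minimal reconstruction of the idea under simple assumptions: each element is treated as an ideal point source, the speed of sound is taken as 343 m/s, and the function names, the `weights` parameter and the example geometry are all hypothetical.

```python
import cmath
import math

SPEED_OF_SOUND = 343.0  # m/s, assumed room-temperature value

def array_pressure(element_heights, weights, freq_hz, listener_x, listener_y):
    """Complex sum of point-source contributions at a listener position.

    element_heights: height (m) of each element on a vertical line at x = 0
    weights: relative drive level of each element (1.0 = full level)
    """
    k = 2.0 * math.pi * freq_hz / SPEED_OF_SOUND  # wavenumber
    total = 0j
    for height, weight in zip(element_heights, weights):
        # Distance from this element to the listener's ear.
        r = math.hypot(listener_x, listener_y - height)
        # The air path delay rotates the vector; 1/r weakens it with distance.
        total += weight * cmath.exp(-1j * k * r) / r
    return total

def level_db(pressure, reference):
    """Level of one complex pressure relative to another, in dB."""
    return 20.0 * math.log10(abs(pressure) / abs(reference))

# Hypothetical example: five tweeters on 30 mm centres, heard from 3 m away.
heights = [(n - 2) * 0.03 for n in range(5)]
weights = [1.0] * 5
on_axis = array_pressure(heights, weights, 5000.0, 3.0, 0.0)
above = array_pressure(heights, weights, 5000.0, 3.0, 1.0)
print(round(level_db(above, on_axis), 1), "dB relative to on axis")
```

Sweeping `freq_hz` gives a frequency response at one listening point, and sweeping the listener position at a fixed frequency gives a polar pattern. Changing the entries in `weights` is one way to experiment with unequal drive levels across the elements.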

It works out that there are a number of frequency regions over which predictable phenomena will happen. Response gets narrower with rising frequency, then lobes form and bend inwards as more lobes form. Finally, at highest frequencies the vector summing gets somewhat random and the response broadens again.

The other discovery was the significant difference between near field and far field response. In the far field these arrays are very directional. In the near field there is a relationship between the height of the listener and the length of the line. In general, if you are within the end points (from top to bottom of the array) you hear strong, full range response. Get above the top or below the bottom and the response drops like a stone. If you want to minimize what the room is doing they will make a number of room reflections, especially floor and ceiling reflections, disappear. That is a good thing.

Once I found that I could model these arrays and start to understand them, I found that long arrays, say floor to ceiling length worked ideally, but mid length arrays were messy. Even staying within their endpoints they had more response variation than I was willing to accept. I explored the benefit of using a weighting profile to improve the uniformity of response, both on and off the center line of the array. The Mac XRT24 was the end result and the response characteristics were much improved over previous short lines. Interestingly, in spite of publishing the results, and in spite of the growing popularity of line arrays, I haven’t seen any high end designers use the weighted elements approach (the Keele designed CBT arrays are a very notable exception, more intended for PA use).

Our program used Fortran on an old DEC PDP11. With the explosion in computer technology, there is now some wonderful software out there for array modeling, especially now that it has become so popular for large concert PA systems.

The eXpanding Array was a concept I came up with afterwards while at Snell. I suppose the systems were an evolution of work I had done on THX certification. THX was pushing higher vertical directivity but most of the licensees would just stack a pair of tweeters, or perhaps 2 tweeters and 2 woofers. The axial response could be fine and the directivity was higher, so room effects would diminish somewhat, but the response as you went off axis was a mess.

It was important to me that I achieve a system with smoother directivity and without the usual response nulls off axis. At Mac I did a THX system with 3 tweeters in a short row, not a line array so much as just a short multi-element array. If the center tweeter was used for its full range and the outer tweeters were progressively rolled off I could get something that was lobe free. It was directivity without “pain”. At Snell I just extended that over a number of sections and a wider frequency range. I was using Peter Shuck’s early Xopt crossover/system modeling software and was finding ways to stack elements tight and roll off each section in particular ways that gave very well behaved directivity. I found I could achieve flat response on axis, less than a dB or so droop at +-15 degrees vertical and a totally uniform 6dB or so shelving at +- 30-40 degrees. This was exceedingly well behaved. In essence, with the smooth transition from the inner tweeter to adjacent mids, to surrounding woofer, you are making a system that has an effective length proportionate to radiated wavelength. Looking for a marketing hook, we called it an eXpanding Array, meaning that its effective length expanded as you went down in frequency (that seemed more positive than it contracting as you go up in frequency!)

So with JBL I worked with constant directivity wave guide devices, at McIntosh with medium and long line arrays and at Snell with highly optimized symmetrical arrays. Of the 3 approaches I think the symmetrical array is best. Response is very well behaved and the directivity increase is about right, not as extreme as it is with the long arrays.

RA: Dipoles provide directivity control. What do you think of the open baffle design school?

DS: I’m a little bit bemused by it all.

I love the DIY speakers movement, but I sometimes feel that people just like to self impose big challenges just so that they can tackle them. We seem to like to get engrossed in pursuing any single minded approach, as it appeals to our idealism. So a pretty figure 8 polar pattern looks like a very good thing (as does a flat phase curve, or ultra high efficiency, or full range crossover-less speakers, etc).

There is an argument to be made that higher directivity is a good thing, reduces the interaction between the speaker and the room. Yet we are discovering that all room reflections are not created equally. Lateral reflections from the side walls of your room tend to give a nice sense of spaciousness and envelopment. They tend not to upset our sense of system frequency response. On the other hand, reflections from the wall behind a speaker are hard for our 2 ears to separate from the direct sound. Rather than spaciousness they add response coloration. As such the dipole radiation pattern is kind of a poor choice. It will tend to increase the rear wall bounce and diminish the side wall bounces.

You can get around this to a degree if you toe in your dipoles considerably, but I really doubt that most dipole owners do that. It seems that dipole or figure 8 advocates have made a fetish of the polar pattern and, as stated before, I care very much for flat and even response over a broad listening window but can’t get too excited about absolute directivity.

The bigger technical disadvantage to dipole systems is the loss of LF energy from long wavelength cancelation. Unless your baffle is quite large you will have a first order roll off for bass frequencies. Most designers equalize around it but the power handling loss is quite significant. For the DIY builder it isn’t a great concern, but the cost penalty or performance loss is considerable and that explains why there are few open back speakers seen in the commercial marketplace.
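The first order roll off can be seen with a very simple model, sketched here under stated assumptions: the front and rear radiation are treated as two equal point sources of opposite polarity, separated by an effective path difference set by the baffle size, and only the on-axis far field is considered. The function name and the 0.3 m figure are illustrative, not measured values.

```python
import math

SPEED_OF_SOUND = 343.0  # m/s, assumed room-temperature value

def dipole_gain_db(freq_hz, path_difference_m):
    """On-axis level of an ideal dipole relative to its front source alone.

    The rear wave arrives inverted and delayed by the path difference D,
    so the summed magnitude is |1 - exp(-j*k*D)| = 2*|sin(k*D/2)|.
    The model is undefined at the exact frequencies where this sum is zero.
    """
    k = 2.0 * math.pi * freq_hz / SPEED_OF_SOUND  # wavenumber
    magnitude = 2.0 * abs(math.sin(0.5 * k * path_difference_m))
    return 20.0 * math.log10(magnitude)

# Hypothetical baffle with a 0.3 m front-to-back path difference.
for freq in (25.0, 50.0, 100.0, 200.0):
    print(freq, "Hz:", round(dipole_gain_db(freq, 0.3), 1), "dB")
```

While the wavelength is long compared with the path difference, each halving of frequency costs roughly another 6 dB in this model. Any equalization that restores flat response has to supply that lost level from the drivers and amplifier, which is the power handling penalty mentioned above.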

Still, I have seen some good theoretical work on the subject of dipoles and I don’t doubt that some designers have gotten great technical results and very good sound. I just feel that the starting point is a bit misguided.

RA: I do some speaker building. The most time-consuming component I find is the cabinet. How do you design a good cabinet efficiently? What are the mantras for stuffing?

DS: I’ve spent a bit of my career in architectural acoustics and come to have a much better understanding of cabinet performance by looking at the architectural parallels. A great deal of work has gone into building and measuring wall assemblies to try and keep your neighbor’s noise out of your side of the world. This is really what we want to achieve with loudspeaker cabinets. We want sound from the back side of the speaker to stay in the box. In architectural terms we want the Transmission Loss to be high.

Now energy loss through the cabinet walls isn’t the problem so much as that it isn’t a uniform loss. Every cabinet wall will have numerous panel resonances and at those resonances the panels become essentially transparent. As this is a narrow band phenomenon it will have a long time signature and can have an audible effect out of proportion to its energy level.

Cabinet construction frequently gets to the heart of audiophile beliefs and common misunderstandings. In audiophile circles, if a little wall thickness is good then a lot is always better. The physics are actually at odds with that. We need to lower the Q of cabinet resonances and higher mass or higher rigidity diminish the effect of any damping we apply. Damping is the key and we want a high ratio of damping material to wall mass or rigidity. The upshot is that thicker cabinet walls will always raise the Q of resonances and make their damping harder to achieve. Raising the resonance frequencies with more rigid walls will seldom get them above audibility; more likely they will just move into a range where they are more audible. This is at odds with many audiophiles’ understanding so it tends to lead to spirited arguments on the forums, but the physics is clear.

Much of the understanding on this topic comes from the BBC research, especially of Harwood. This brings us around full circle so it is worth discussing. You asked about professional products and their influence. In the 60s and 70s the BBC found that with studio monitors, commercial offerings were inadequate and so they felt the need to design their own. These were very pure, purposeful designs. The brief was to create a range of monitor systems by focusing primarily on neutrality over all else. The BBC knew that the music mixing process is fundamentally about taking your microphone feeds and using all the tools at your disposal (equalization) to create a product that has a very particular sound that you want to achieve. Now you are judging that sound via a pair of studio monitors that are not directly in the recording chain. In truth, if those monitors are colored you end up incorporating the inverse of their personality into your mix. That is, if the speakers are bright you will unwittingly make the mix dull to compensate.

So neutrality was key. Beyond that, the BBC monitor series was very no-nonsense. They were mostly simple 2 way systems but with great attention to resonance reduction through careful driver design, crossover design and cabinet design. As speakers were developed they could always have a listen and then walk into the adjoining studio to hear the musicians again live. This is very focusing on whether you are going in the right direction or not.

BBC research is highly regarded with Harwood and Sowter, Mathers, and others really advancing the art. To me, the designs are a model of clear thinking and of getting the basics right. Since they were meant as an internal tool they could be totally devoid of fashion or commercial pressures. I was involved in the 11 ohm redesign of the LS3/5a monitor, so I actually have a bit of a contrarian view on it. It is good but not up to the hype, in my mind (or even in the BBC’s mind). I think the LS5/9 and 5/10 were probably the high points here.

RA: Speaker design and measurement capability is now easily accessible. Many high-performance drivers (Scanspeak from the Anat Reference) are also available. The DIY crowd feels that with enough inclination, anyone can recreate what manufacturers are putting out, and perhaps even better it at a fraction of the cost. Is this true? What would your message be to someone who has set out to design the best speaker possible for themselves? Where does the sweet spot lie and where can we make compromises?

DS: I have seen some DIY designs that rival the best of what the established companies are putting out. There are a few amateur designers out there that have more ability than some (but not all) of the professional designers that I know. Also, it is amazing what affordable tools are now around. For a few hundred dollars an enthusiast can get a calibrated microphone and some measurement software, and be nearly as well equipped as a pro. Even cheap DSP tools are now available.

So there is no reason why a hobbyist can’t make something to rival the commercial offerings. As to value, you have to consider your own time. If it is a hobby and you don’t want to put a price on it, then you can build something better than you can buy for the same money. Speaker companies must not only put a price on the bill of materials of a product, but must also cover all overheads in the company and up through the sales chain. Usually the selling price is 3 to 5 times the raw parts and labor cost. On the other hand, they aren’t paying an inflated retail for drivers.

Cost isn’t really the issue. If speaker design appeals to you then do it out of passion and as a learning experience. If you put a price on your time your speakers will be way more expensive than just the parts cost. Much like restoring a car or building a hot rod, after several 1000 hours, what has the car really cost you?

RA: The market is saturated with loudspeakers at nearly all price points. Is there a way to separate the good from the bad and avoid making a poor choice?

DS: First off we should use and trust our ears. Try to find a way to do careful comparisons between known speakers and others of interest. Use material you know well (and a variety of it) and just listen for naturalness and evenness of balance. Be leery of anything that jumps out as different than the pack. If you can, use some simple frequency response measurements. If you both listen and measure, you have a great advantage over anyone that does one but not the other.

Secondly, look to the established brands of known reputation. The KEFs and Polks and PSBs and B&Ws may have the odd dud product, but for the most part they need consistently good products to survive in a competitive market. As a parallel, I follow the headphone market and am surprised by how many customers fall for marketing heavy offerings from Skull Candy and Monster over good established products from companies with long term reputations such as Sennheiser, AKG and even Sony.

RA: What are your thoughts on the future of HiFi?

DS: I think it’s a bit like A Tale of Two Cities: “It was the best of times, it was the worst of times.”

Technology allows us to do amazing things these days, but the interest in serious audio is at a real low.

As an example, I bought a small audio recorder a few weeks ago that records stereo to a memory card. Less than $100 and a wider frequency range with lower noise than any tape recorder from the old days. In general, digital audio is impressive and MP3s can sound quite good if you keep the data rate up sufficiently.

But for the most part the personal audio revolution has left people with little interest in good quality sound reproduction in the home. They are happy to come home and stick their iPod in a cheap docking station and accept whatever sound comes out. Convenience and having massive amounts of songs seems more important than listening quality. I do take hope from portable audio enthusiasts that are buying good quality headphones and even headphone driver amps, trying to achieve the best possible portable audio experience, although this seems to be a small portion of the market.

Home theater is a little better as some enthusiasts have good speakers and a good AV receiver for their theaters. But video technology is quite sexy these days and people are way more willing to spend money on a larger display than on the audio side.

DSP allows quite sophisticated room EQ, but I don’t believe the best approach to this is yet understood. This is an area of interest that I am working in now.

We’ve also seen a wholesale shift of audio manufacturing to China and much of the engineering expertise went with it. This is sad because a rich history of audio design from North America and Europe is coming to an end. These days, Audio engineering primarily exists as a spin off from computer developments.

I also lament that the loudness wars are degrading our music sources. It seems so silly to think that a CD recorded with level squished into the top 3 dB will somehow appeal more to the market, but that seems to be the mindset of many music producers. We need to start returning bad recordings to send a message to the record companies that poor audio recording practices won’t be tolerated.

Still, the potential for great sound is out there with new technology. I am also greatly impressed with the knowledge that some DIY enthusiasts now have in amplifier and speaker design.

Thank you for your interesting questions!

RA: Thank you very much for your time, David!

© Copyright 2013 Rahul Athalye – www.tnt-audio.com